Aligning Documentation and Q&A Forum through Constrained Decoding with Weak Supervision

This post is based on my paper titled “Aligning Documentation and Q&A Forum through Constrained Decoding with Weak Supervision”, accepted at ICSME 2023. The paper is available here.

Stack Overflow (SO for short) is a popular Q&A forum for software developers. It contains a wealth of information on a wide range of programming topics, but finding the relevant information quickly can be difficult. SO users also often reference external websites such as blogs, tutorials, and documentation in their answers, and SO plays a supplementary role to official documentation (DOC for short) by offering practical examples. In our preliminary analysis, 53 out of 200 sampled SO answers included at least one DOC link. Because the two resources are disconnected, searching both for relevant DOC sections and SO posts at the same time is time-consuming and inefficient.

To solve this problem, we proposed DOSA (short for Documentation & StackOverflow Alignment), a novel approach to turn LLMs into a domain-specific retriever capable of determining the specific section within the DOC to which a given SO question belongs.

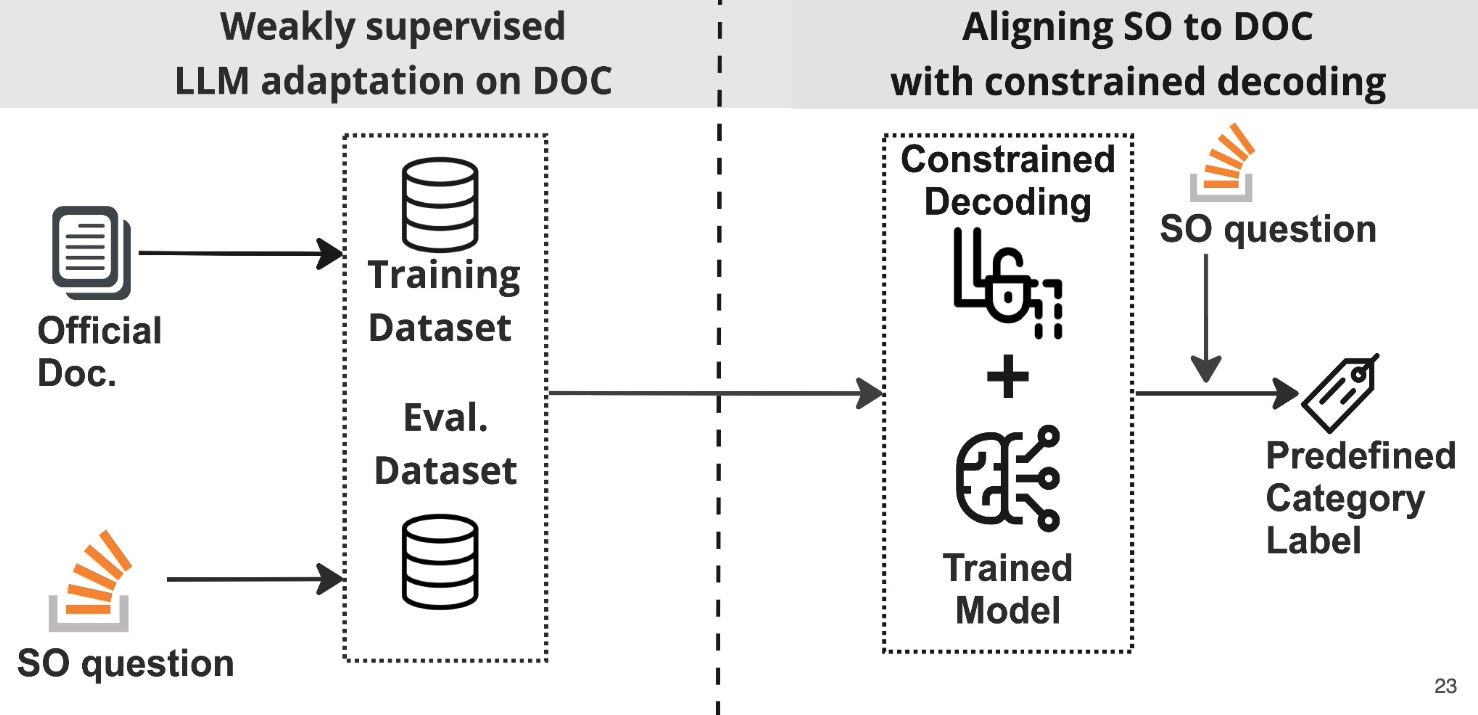

Here is the overview of our approach.

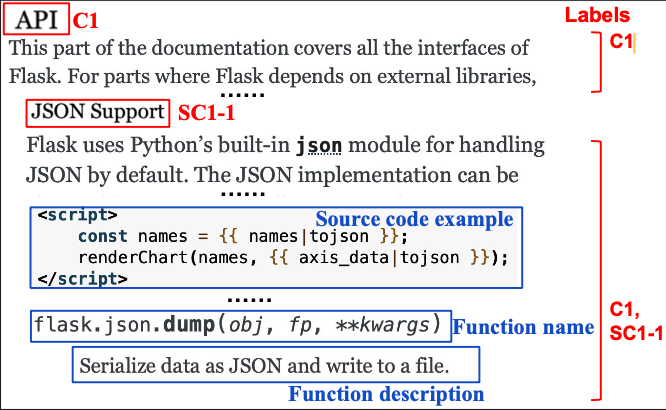

We first extract categories and subcategories from the documentation, using the Flask documentation as an example.

Section headings categorize the text beneath them: a top-level heading such as ‘API’ is a category, and its sub-headings, such as ‘JSON Support,’ are sub-categories. Text directly under the API heading is labeled ‘API,’ and text under the JSON Support sub-heading is labeled both ‘JSON Support’ and ‘API.’ We group every three sentences into one training example and repeat this for each heading, so the labels form a tree-like structure. As a starting point, we chose two popular topics, Python and Flask, to study the feasibility of our method.
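The labeling scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's actual preprocessing code: the `Sections` mapping, the `split_sentences` and `build_examples` helpers, and the regex-based sentence splitter are all assumptions made for this example, and the sample text is placeholder text rather than a quote from the Flask documentation.

```python
import re
from typing import Dict, List, Optional, Tuple

# (category, subcategory) -> raw text under that heading. The subcategory is
# None for text that sits directly under the top-level heading.
Sections = Dict[Tuple[str, Optional[str]], str]


def split_sentences(text: str) -> List[str]:
    """Naive splitter (an assumption): break after '.', '!' or '?' plus whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def build_examples(sections: Sections, group_size: int = 3) -> List[Tuple[str, List[str]]]:
    """Turn heading-scoped text into (chunk, labels) training examples."""
    examples = []
    for (category, subcategory), text in sections.items():
        # Sub-category text inherits the parent category label as well.
        labels = [category] if subcategory is None else [category, subcategory]
        sentences = split_sentences(text)
        # Group consecutive sentences; the last group may be shorter.
        for start in range(0, len(sentences), group_size):
            chunk = " ".join(sentences[start:start + group_size])
            examples.append((chunk, labels))
    return examples


# Placeholder text, not quoted from the Flask documentation.
toy_sections: Sections = {
    ("API", None): "First sentence. Second sentence. Third sentence. Fourth sentence.",
    ("API", "JSON Support"): "Fifth sentence. Sixth sentence.",
}

for chunk, labels in build_examples(toy_sections):
    print(labels, "<-", chunk)
```

A real pipeline would likely use a proper sentence tokenizer and parse headings from the documentation's HTML or source files; the point here is only how a chunk inherits the labels of every heading above it.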

We then collect Stack Overflow posts related to Python and Flask for our evaluation. We randomly sampled 200 posts from the Stack Overflow dataset, focusing on the ‘Python’ and ‘Flask’ tags. Two of our authors independently mapped these posts to specific topics within the Python or Flask documentation, using the categories and subcategories extracted from the documentation as labels.

We then fine-tune the LLM on the documentation corpus and use the fine-tuned LLM to generate labels for Stack Overflow posts. No existing dataset maps Stack Overflow questions to documentation, and conventional ML approaches require extensive human-labeled data, which isn’t available. That’s why DOSA uses LLMs to perform this task in a zero-shot manner: their language comprehension, fine-tuned on documentation, lets them connect Stack Overflow questions to documentation sections without explicit training data.

The first part of our DOSA approach involves weak supervision. We fine-tune the model using data scraped from documentation. Later, we test it on a manually labeled dataset of Stack Overflow questions. While our training and evaluation data differ, they share similarities. This is the essence of weak supervision: the model learns from text extracted from documentation and applies this knowledge to label Stack Overflow questions using categories and subcategories from the documentation.

For example, given our Stack Overflow question on posting JSON in Flask, the model should predict the expected category and sub-category from the documentation. At each decoding step, an LLM selects one token from its entire vocabulary to build the final output. Weak supervision alone is therefore not enough to align Stack Overflow questions with documentation categories and sub-categories, because the LLM can hallucinate outputs outside the label set. So we introduce constrained decoding to limit the LLM’s vocabulary. Constrained decoding incorporates domain terminology, ensuring that generated tokens align with the labels extracted from the documentation. It only affects the generation process and does not change the fine-tuning process in any way.
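To make the idea concrete, here is a small, self-contained sketch of constrained decoding over a prefix tree (trie) of allowed labels. It is an illustration under stated assumptions, not DOSA's implementation: labels are split into whitespace tokens instead of subword IDs, `toy_scores` is a hypothetical stand-in for the fine-tuned LLM's next-token scores, and `build_trie`, `allowed_next`, and `constrained_greedy_decode` are names invented for this example.

```python
from typing import Callable, Dict, List, Sequence

END = "<eos>"  # marks the end of a complete label


def build_trie(labels: Sequence[Sequence[str]]) -> dict:
    """Store every allowed label as a path of tokens in a nested dict."""
    trie: dict = {}
    for tokens in labels:
        node = trie
        for tok in list(tokens) + [END]:
            node = node.setdefault(tok, {})
    return trie


def allowed_next(trie: dict, prefix: Sequence[str]) -> List[str]:
    """Tokens that keep the prefix on a path to some allowed label."""
    node = trie
    for tok in prefix:
        node = node.get(tok)
        if node is None:  # prefix already left the label set
            return []
    return list(node.keys())


def constrained_greedy_decode(
    score_fn: Callable[[List[str]], Dict[str, float]],
    trie: dict,
    max_steps: int = 16,
) -> List[str]:
    """Greedy decoding where each step only considers trie-allowed tokens."""
    prefix: List[str] = []
    for _ in range(max_steps):
        candidates = allowed_next(trie, prefix)
        if not candidates:
            break
        scores = score_fn(prefix)
        best = max(candidates, key=lambda t: scores.get(t, float("-inf")))
        if best == END:
            break
        prefix.append(best)
    return prefix


# Labels extracted from the documentation, split into toy "tokens".
LABELS = [["JSON", "Support"], ["Application", "Object"], ["Sessions"]]


def toy_scores(prefix: List[str]) -> Dict[str, float]:
    # Hypothetical model scores: the top-scoring token "Requests" is not a label.
    return {"Requests": 5.0, "JSON": 3.0, "Support": 2.0, "Sessions": 1.0,
            END: 0.5, "Application": 0.1, "Object": 0.1}


trie = build_trie(LABELS)
scores = toy_scores([])
print("unconstrained first token:", max(scores, key=scores.get))        # Requests
print("constrained label:", constrained_greedy_decode(toy_scores, trie))  # ['JSON', 'Support']
```

Unconstrained greedy decoding would start with the out-of-vocabulary token, while the trie forces the output onto a valid label path. With a real model, one way to apply the same idea is the `prefix_allowed_tokens_fn` argument of Hugging Face Transformers' `generate`, which receives the tokens generated so far and returns the allowed next token IDs; whether DOSA uses that hook is not stated here.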

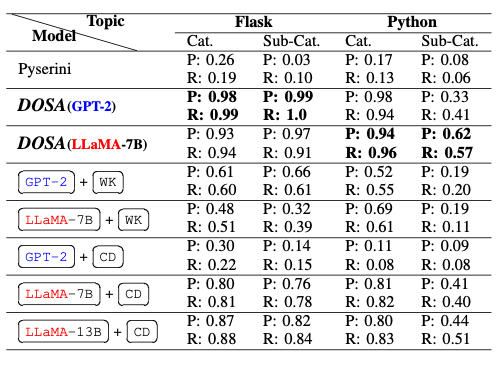

We evaluated DOSA on two documentation sites, Python and Flask. We compared it against Pyserini, a popular information retrieval toolkit, and against LLMs (GPT-2 and LLaMA), and we also measured the contribution of each DOSA component (i.e., weak supervision and constrained decoding). We used precision and recall to evaluate each approach.
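As a reminder of what the two metrics measure in a label-prediction setting, here is a minimal sketch. The exact evaluation protocol is not described in this post, so the micro-averaged, set-based definition below is an assumption, and the function name and sample labels are made up for illustration.

```python
from typing import List, Set, Tuple


def precision_recall(predicted: List[Set[str]], gold: List[Set[str]]) -> Tuple[float, float]:
    """Micro-averaged precision and recall over per-post label sets."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same number of posts")
    # A true positive is a predicted label that also appears in the gold set.
    true_pos = sum(len(p & g) for p, g in zip(predicted, gold))
    n_pred = sum(len(p) for p in predicted)
    n_gold = sum(len(g) for g in gold)
    precision = true_pos / n_pred if n_pred else 0.0
    recall = true_pos / n_gold if n_gold else 0.0
    return precision, recall


# Made-up labels for two posts, for illustration only.
predicted = [{"API", "JSON Support"}, {"Quickstart"}]
gold = [{"API", "JSON Support"}, {"API", "Sessions"}]

p, r = precision_recall(predicted, gold)
print(f"precision={p:.3f} recall={r:.3f}")
```

Precision penalizes labels the model predicts that annotators did not assign, while recall penalizes annotated labels the model misses.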

Our approach offers a practical solution to the persisting problem of aligning SO to DOC, providing a crucial bridging mechanism between advanced LLMs and the realm of precise information retrieval. We established a foundation for aligning various documentation resources within the same domain. Future work can capitalize on these findings to not only amplify the generalizability, performance, and utility of the proposed approach but also to extend its application to broader domains beyond programming. Exploring innovative methods for categorizing diverse types of technical documents is also a promising avenue for future exploration.
